Core Principles of Generative Search Optimization

How LLM-Based Answer Engines Work

AI search engines such as ChatGPT use a Retrieval-Augmented Generation (RAG) pipeline, which differs substantially from traditional search indexing. Rather than matching keywords alone, these systems follow a four-step process to produce an answer [5][2].

First, the engine interprets your query to identify its intent and key entities. Second, it retrieves candidate documents from across the web. Third, in the passage extraction phase, the AI scores chunks of content (typically 128–512 tokens) on relevance, factual accuracy, and ability to stand alone [5]. Finally, the engine synthesizes the selected passages into a cohesive answer and decides which sources to cite.

Unlike traditional search indexing, which evaluates whole pages, this process breaks content into semantic passages and scores each one individually for relevance and self-containment. As a result, even a well-optimized page can lose visibility if its key information is scattered across several passages [5]. This segmentation forms the basis for the ranking signals discussed below.
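To make the segmentation step concrete, here is a minimal sketch of how a page might be split into passages before scoring. It assumes that tokens can be approximated by whitespace-separated words and that paragraphs are separated by blank lines; real engines use model-specific tokenizers and their own chunking rules, so `split_into_passages` is a hypothetical helper, not any engine's actual method.

```python
def split_into_passages(text, max_tokens=512):
    """Greedily pack paragraphs into passages of at most max_tokens words.

    Assumption: one whitespace-separated word counts as one token.
    """
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    passages, current = [], []
    for para in paragraphs:
        words = para.split()
        # A paragraph longer than max_tokens is cut into fixed-size pieces.
        for i in range(0, len(words), max_tokens):
            piece = words[i:i + max_tokens]
            # Start a new passage if this piece would overflow the current one.
            if current and len(current) + len(piece) > max_tokens:
                passages.append(" ".join(current))
                current = []
            current.extend(piece)
    if current:
        passages.append(" ".join(current))
    return passages


if __name__ == "__main__":
    page = "\n\n".join(" ".join(["word"] * 300) for _ in range(3))
    for n, passage in enumerate(split_into_passages(page), start=1):
        print(n, len(passage.split()))
```

Running your own pages through a splitter like this is a quick way to see whether a key claim and its supporting context end up in the same passage or are cut apart.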

Ranking Signals for Generative Search

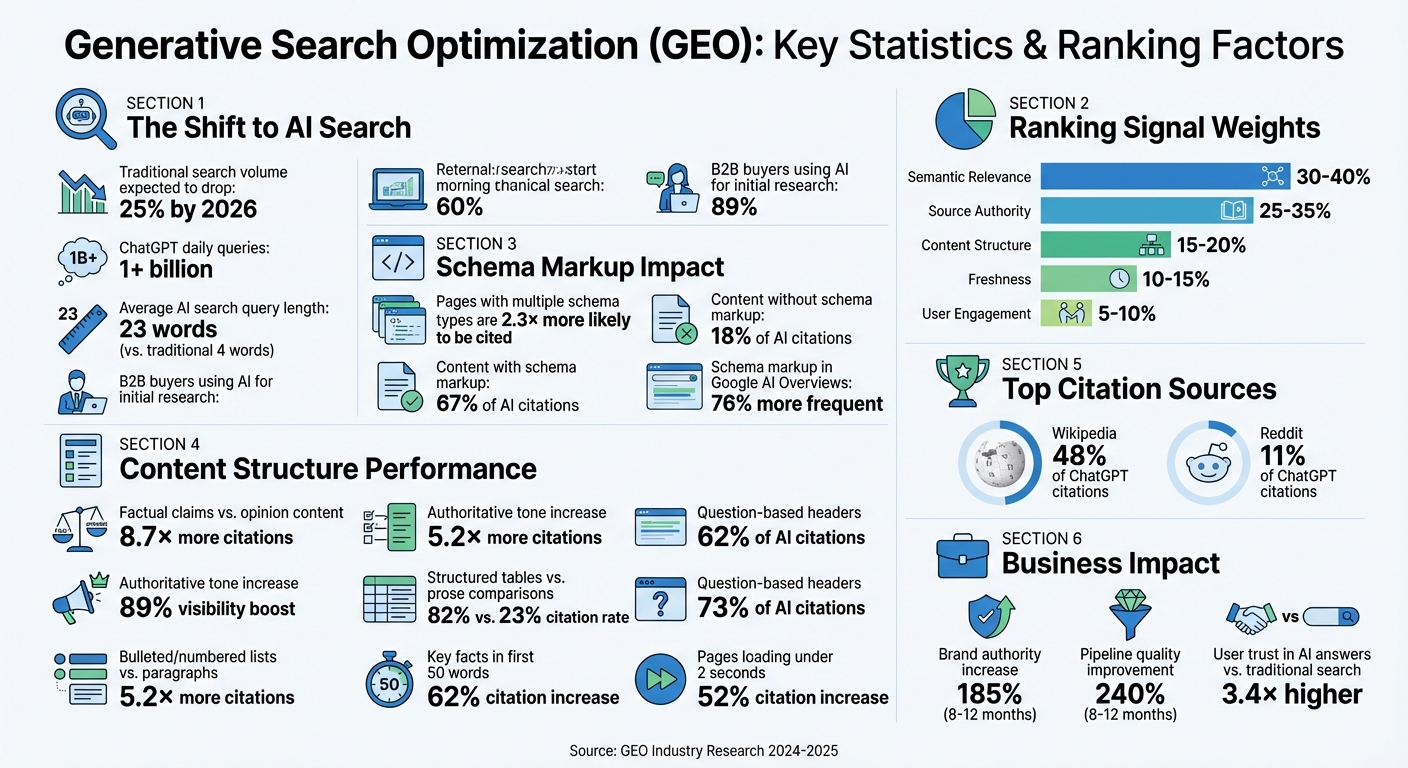

AI search engines rely on several signals to determine which sources to cite. Semantic relevance carries the most weight, at 30–40% of the citation decision, and is measured through embedding similarity and entity overlap [5]. Source authority follows closely at 25–35%, driven by factors such as domain trust, backlink profiles, and author credentials [5].
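The two semantic relevance measures named above can be illustrated with a short sketch. It assumes that embeddings are plain lists of floats and that entity overlap is computed as Jaccard similarity over lowercased entity names; both choices are illustrative, since the source does not specify how any engine computes them.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction to compare
    return dot / (norm_a * norm_b)


def entity_overlap(query_entities, passage_entities):
    """Jaccard overlap of entity names (an assumed definition)."""
    q = {e.lower() for e in query_entities}
    p = {e.lower() for e in passage_entities}
    if not q or not p:
        return 0.0
    return len(q & p) / len(q | p)


if __name__ == "__main__":
    print(cosine_similarity([0.2, 0.7, 0.1], [0.25, 0.6, 0.05]))
    print(entity_overlap({"ChatGPT", "RAG"}, {"rag", "schema markup"}))
```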

Content structure contributes 15–20% to the ranking. AI tools prefer well-organized content with clear headers, lists, and logically structured passages [5]. Freshness makes up another 10–15%, with recent publication dates and updated content being favored [5]. Finally, user engagement signals, when available, account for the remaining 5–10% [5].
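As a rough illustration of how these five signals could combine, the sketch below turns the percentage ranges quoted above into weights and computes a single score. The weighting scheme is an assumption: it takes the midpoint of each range and normalizes, because the midpoints sum to 102.5% rather than 100%. The names `SIGNAL_RANGES` and `citation_score` are hypothetical, and no engine is known to use this exact formula.

```python
# Percentage ranges as quoted in the text; everything else is illustrative.
SIGNAL_RANGES = {
    "semantic_relevance": (30, 40),
    "source_authority": (25, 35),
    "content_structure": (15, 20),
    "freshness": (10, 15),
    "user_engagement": (5, 10),
}


def midpoint_weights(ranges):
    """Use each range's midpoint, normalized so the weights sum to 1."""
    mids = {name: (low + high) / 2 for name, (low, high) in ranges.items()}
    total = sum(mids.values())
    return {name: mid / total for name, mid in mids.items()}


def citation_score(signals, ranges=SIGNAL_RANGES):
    """Weighted sum of signal values in [0, 1]; missing signals count as 0."""
    weights = midpoint_weights(ranges)
    return sum(weights[name] * signals.get(name, 0.0) for name in weights)


if __name__ == "__main__":
    page = {
        "semantic_relevance": 0.9,
        "source_authority": 0.6,
        "content_structure": 0.8,
        "freshness": 0.5,
        # user_engagement omitted: the signal is not always available
    }
    print(round(citation_score(page), 3))
```

Treating a missing engagement signal as zero is one design choice among several; redistributing its weight across the remaining signals would be an equally reasonable alternative.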

Structured data also matters: pages that use multiple schema types are 2.3 times more likely to be cited than those relying on a single schema [5]. Additionally, 67% of AI citations come from content with schema markup, compared to just 18% from unmarked pages [10].
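For readers unfamiliar with combining schema types, the sketch below builds one JSON-LD document that carries both an `Article` and an `FAQPage` node from schema.org. The helper name `build_jsonld`, the sample headline, author, date, and FAQ text are all placeholders, and pairing these two types is only one possible combination.

```python
import json


def build_jsonld(headline, author_name, date_published, faqs):
    """Return a JSON-LD dict with an Article node and an FAQPage node."""
    article = {
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": date_published,
    }
    faq_page = {
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in faqs
        ],
    }
    # "@graph" lets several typed nodes share one context.
    return {"@context": "https://schema.org", "@graph": [article, faq_page]}


if __name__ == "__main__":
    doc = build_jsonld(
        headline="Example headline",        # placeholder values
        author_name="Example Author",
        date_published="2025-01-01",
        faqs=[("What is RAG?", "Retrieval-Augmented Generation.")],
    )
    print(json.dumps(doc, indent=2))
```

The printed JSON would normally be embedded in the page inside a `<script type="application/ld+json">` element.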

Aligning Content with Relevance and Trust

To increase the likelihood of being cited by AI engines, your content needs to be both relevant and credible. Content that includes clear, factual claims is cited 8.7 times more often than opinion-heavy material [10].

Establishing E-E-A-T (Experience, Expertise, Authoritativeness, and Trustworthiness) is essential for AI citations. Models look for author credentials, verified claims, and consistent entity naming throughout your content [5][2]. A Princeton study reported that rewriting passages in an authoritative tone increased AI visibility by 89% [1].

"The more proof of a human author, rather than AI, the better. Domain authority becomes even more important as well."

– Baird Hall, Digital Marketing Expert [9]

To boost your content's AI citation potential, ground your claims in solid data and include expert quotes. Write in concise, self-contained paragraphs (around 200 words) to ensure coherence when segmented [5]. Use assertive and confident language, avoiding vague terms like "might" or "possibly" [1]. Inline citations to reputable sources can further enhance your visibility by 30–40% [1][8].
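These writing guidelines lend themselves to a simple automated check. The sketch below flags paragraphs that exceed a word limit, contain hedging terms, or lack an inline citation. It is a rough aid under stated assumptions: the 200-word default comes from the guideline above, the hedge list contains only the two terms quoted in the text, citations are assumed to look like `[1]`, and `audit_paragraph` is a hypothetical helper.

```python
import re

# Only the two terms named in the text; extend the tuple as needed.
HEDGE_TERMS = ("might", "possibly")


def audit_paragraph(paragraph, max_words=200):
    """Report length, hedging terms, and inline citations for one paragraph."""
    word_count = len(paragraph.split())
    tokens = re.findall(r"[a-z']+", paragraph.lower())
    hedges = sorted({t for t in tokens if t in HEDGE_TERMS})
    # Assumption: inline citations are bracketed numbers such as [1] or [12].
    has_citation = bool(re.search(r"\[\d+\]", paragraph))
    return {
        "word_count": word_count,
        "over_limit": word_count > max_words,
        "hedges": hedges,
        "has_inline_citation": has_citation,
    }


if __name__ == "__main__":
    sample = "Schema markup might help, possibly a lot. See the data [1]."
    print(audit_paragraph(sample))
```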